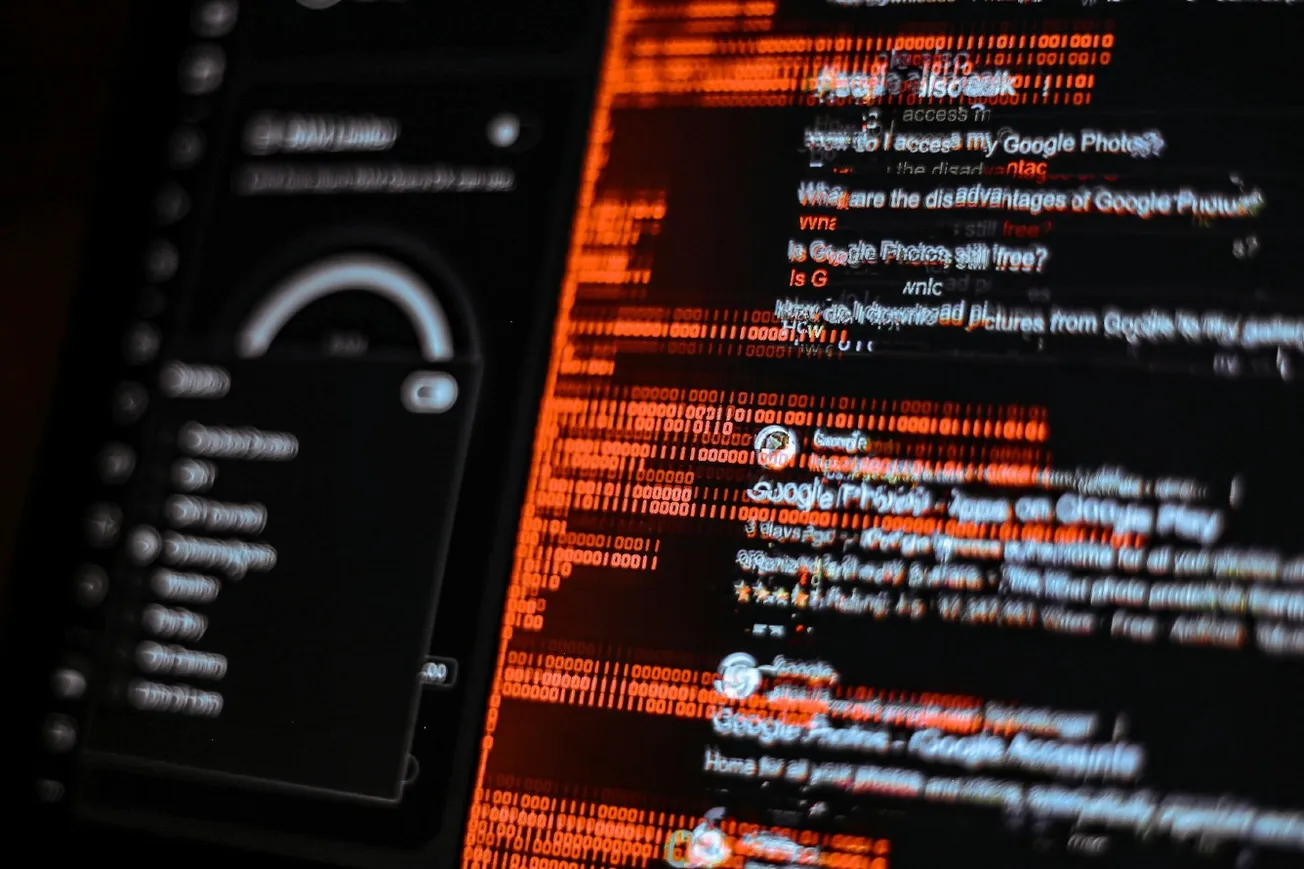

Google researchers have uncovered the first known case of AI-powered malware being used in real-world cyberattacks, a development the company says marks a new phase of AI-driven hacking. Its Threat Intelligence Group identified two new malware strains, PromptFlux and PromptSteal, that use large language models (LLMs) to modify their behavior mid-attack.

Hackers are already using AI-enabled malware, Google says https://t.co/sw8HGzYBcO

— Axios (@axios) November 5, 2025

PromptFlux, which Google found through its VirusTotal malware-scanning service, can use Gemini to rewrite its own code, disguise its malicious activity, and spread across connected systems.

Meanwhile, PromptSteal, reportedly used by Russian military hackers against Ukrainian targets, relies on an open-source language model that lets attackers steer the malware through text prompts.

Google warns of new AI-powered malware families deployed in the wild https://t.co/71NoCxhkoJ #Security #ArtificialIntelligence

— The Cyber Security Hub™ (@TheCyberSecHub) November 5, 2025

Although both strains are still in early development, Google warned that they represent a significant step toward weaponizing generative AI.

The report adds that the underground cybercrime market for AI tools has grown rapidly, making it easier for less-skilled hackers to conduct sophisticated attacks.
