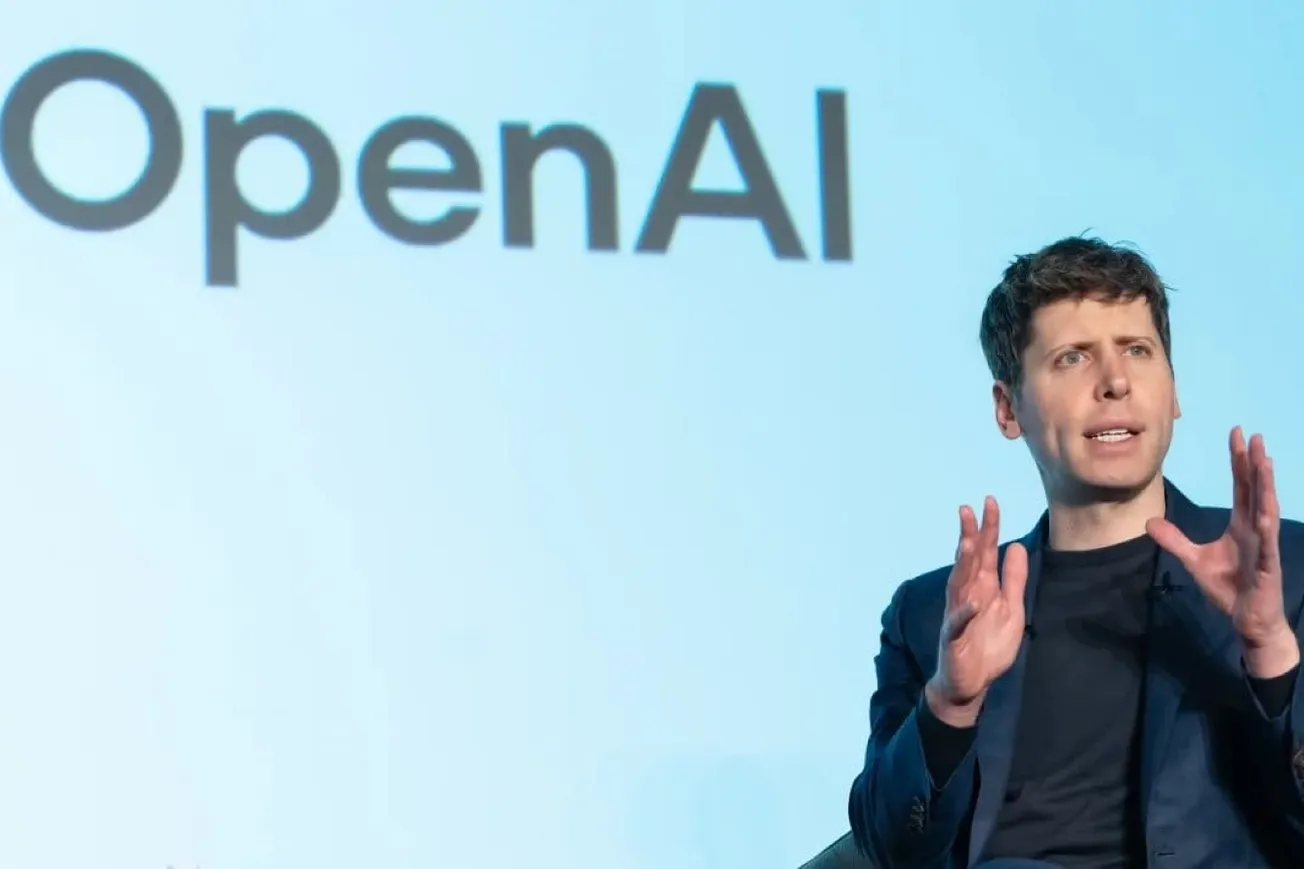

By Mustafa Suleyman, Project Syndicate | Sep 15, 2025

Debates about whether AI can truly be conscious are a distraction. What matters in the near term is the perception that it is, which is why the temptation to design AI systems that foster this perception must be resisted.